In past posts we’ve spoken about the importance of waiting for statistically significant results. That’s why we built this tool: to help you interpret your A/B test results in a simple way. Analyzing the data at the right time, and giving your test enough time to run, can be the difference between a complete flop and a grand success.

It’s not as complicated as it sounds. Our A/B test significance calculator lets you compare the results of two versions of a page and determine which one performs better. You can also add it to your favourites and use it for future tests.

If you have been optimizing your site for a while, you already know how much effort it takes to run a meaningful test with real, scalable results.

Running tests is one thing; knowing what led to an improvement, and how, is another. Even if your conversions have increased, how do you know what caused the increase? Is it genuine, or is it just random chance? To know whether your results are real, you need to compare the page with the original elements (i.e., the control) against the new page (the variation). That is why you need to run an A/B test, wait for the results, make sure they are significant, and only then analyze them and optimize.

How to Use the Significance Tool

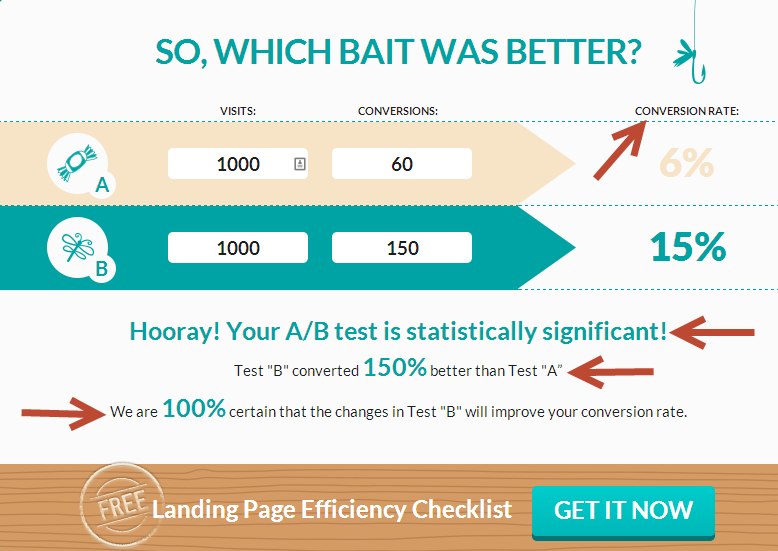

Using the tool is easy. Simply enter the number of visits and conversions for each page, and let the calculator do the rest.

The calculator will tell you everything you need to know about your A/B test:

- The conversion rate of each page
- Which page won the test
- By how much that page beat the other
- Whether your results are significant
- The confidence level of the results
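For readers curious about what happens behind the scenes, here is a minimal Python sketch of how a calculator like this could derive each of the outputs listed above from visits and conversions. It assumes a two-sided, pooled two-proportion z-test with a 0.05 significance threshold; the post does not say which test our calculator uses, so treat the function name `ab_test_summary` and these choices as illustrative. "Confidence" is reported here as 1 - p-value, a convention many online calculators follow, not the probability that the winner is truly better.

```python
from math import sqrt
from statistics import NormalDist


def ab_test_summary(visits_a, conversions_a, visits_b, conversions_b, alpha=0.05):
    """Summarize an A/B test with a two-sided, pooled two-proportion z-test."""
    rate_a = conversions_a / visits_a
    rate_b = conversions_b / visits_b

    # Pooled rate: under the null hypothesis, A and B convert equally.
    pooled = (conversions_a + conversions_b) / (visits_a + visits_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    z = (rate_b - rate_a) / std_err if std_err > 0 else 0.0

    # Two-sided p-value: chance of a gap at least this large if A and B are equal.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))

    if rate_a == rate_b:
        winner = "tie"
    else:
        winner = "B" if rate_b > rate_a else "A"

    # Relative uplift of the better page over the worse one.
    low, high = min(rate_a, rate_b), max(rate_a, rate_b)
    uplift = (high - low) / low if low > 0 else 0.0 if high == 0 else float("inf")

    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        "winner": winner,
        "relative_uplift": uplift,
        "p_value": p_value,
        "confidence": 1 - p_value,  # calculator convention, see lead-in
        "significant": p_value < alpha,
    }


summary = ab_test_summary(visits_a=1000, conversions_a=100, visits_b=1000, conversions_b=130)
for name, value in summary.items():
    print(f"{name}: {value}")
```

For example, 100 conversions out of 1,000 visits versus 130 out of 1,000 (10% vs 13%) names B the winner with a 30% relative uplift and a p-value of roughly 0.035, so it clears the 0.05 threshold; 100 versus 105 conversions on the same traffic does not.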

How results can be misjudged

“One accurate measurement is worth more than a thousand expert opinions”

Admiral Grace Hopper.

Only if you run your A/B test correctly will you be able to interpret the results and draw conclusions from them. If your results are not statistically significant, you should not draw conclusions from the test at all. Significance measures how likely it is that a difference at least as large as the one you observed would appear by chance alone if both versions actually performed the same. Even a significant result is not proof beyond doubt that your A/B test results are not a chance occurrence; it means chance is an unlikely explanation. This is why any A/B test calculator should test for significance. Test results can be misjudged when, for example, you don’t run the test long enough or you haven’t calculated your sample size before the test. Read more about A/B testing mistakes you should avoid.
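Calculating your sample size before the test can be sketched with the standard normal-approximation formula for comparing two conversion rates. The function name, the 5% two-sided significance level, and the 80% power default below are common conventions assumed for illustration, not values taken from our calculator.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, min_relative_uplift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant to detect a given relative uplift.

    Uses the normal approximation for comparing two proportions (two-sided test).
    """
    if min_relative_uplift <= 0:
        raise ValueError("min_relative_uplift must be positive")
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_relative_uplift)  # conversion rate we hope to detect
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)   # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)            # chance of detecting a real effect
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


print(sample_size_per_variant(0.10, 0.10))
print(sample_size_per_variant(0.10, 0.20))
```

With a 10% baseline conversion rate, detecting a 10% relative uplift (10% to 11%) needs roughly 14,700 visitors per variant, while a 20% uplift needs fewer than 4,000. This is also why small changes usually need far more traffic to reach significance than big ones.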

OK, so now you know how to use the A/B calculator and why significance matters. But how does A/B testing actually work? To understand A/B testing and significant results better, read the following tips:

How A/B Testing works

Before planning your conversion test, start with a strategy. The strategy will help you decide which elements in your funnel need to change. Once your strategy is well thought out, you can create your landing pages (or any other pages) and launch your test.

Why do A/B testing

As I’ve mentioned, A/B testing is a must if you want to know what actually caused your conversion rate to change. An A/B test tells you whether the difference between your versions is statistically significant or whether it could simply be random, inconsistent variation.
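To see how easily random variation can masquerade as a real change, you can simulate A/A tests, where both "versions" are identical. The sketch below assumes a true conversion rate of 10%, 1,000 visitors per version, and a pooled two-proportion z-test like the one a typical significance calculator uses; all names and numbers are hypothetical.

```python
import random
from math import sqrt
from statistics import NormalDist


def z_test_p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value from a pooled two-proportion z-test."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        return 1.0
    z = (conv_b / n_b - conv_a / n_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa_tests(true_rate=0.10, visitors=1000, runs=500, alpha=0.05, seed=42):
    """Run A/A tests on identical pages; return the share that look 'significant'."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(runs):
        # Both "versions" share the same true conversion rate.
        conv_a = sum(rng.random() < true_rate for _ in range(visitors))
        conv_b = sum(rng.random() < true_rate for _ in range(visitors))
        if z_test_p_value(conv_a, visitors, conv_b, visitors) < alpha:
            false_positives += 1
    return false_positives / runs


print(f"Share of A/A tests flagged significant: {simulate_aa_tests():.1%}")
```

At a 0.05 threshold, roughly 5% of these identical-page tests come out "significant" purely by chance, which is why a single significant result should be read as strong evidence rather than proof.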

Where should you test

There are many services and tools that let you set up an A/B test; Google Analytics, Optimizely and VWO are a few examples. Each has its own strengths and weaknesses, so try them and decide what works for you. Whichever platform you use should make tests easy to implement and easy to analyze.

Who should do an A/B test

You should! Anyone can set up an A/B test, and as long as you follow a few basic guidelines you should be fine. Should you test small changes or go straight for big ones? If it’s your first test and you’re doing it on your own, I would start with a small change, such as the color of your buttons. (Remember that to see a big increase you usually need to make big changes, but to start out you can go for something simple.) If you’re more experienced with A/B testing, the next section has tips on testing big changes.

What can be tested (and what can go wrong)

Everything on your site can be tested, from the color of your call-to-action button to your headlines, images, funnel and shopping cart. If you decide to test big changes and concepts, make sure they are part of the strategy you built. Testing big changes lets you learn much more from each test, and you can then narrow down to the impact of individual elements such as a button or headline.

When to test

One of the basic principles of A/B testing is timing. Comparing two versions isn’t enough; you should test them at the same time. Otherwise you won’t know whether the change in conversion rate was caused by the changes you made or by the different timing. For example, it wouldn’t be fair to test one version of a Christmas card site a week before Christmas and the second version a week after.
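Running both versions at the same time usually means splitting live traffic between them rather than showing them one after the other. Here is a minimal sketch of a 50/50 split, assuming each visitor has a stable ID such as a cookie value; the function and experiment names are hypothetical, and testing tools like the ones mentioned earlier typically handle this assignment for you.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "homepage-test") -> str:
    """Deterministically put a visitor in variant 'A' or 'B' (50/50 split)."""
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    # Map the hash to a bucket from 0 to 99; the same visitor always gets the same bucket.
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % 100
    return "A" if bucket < 50 else "B"


for visitor in ["visitor-1", "visitor-2", "visitor-3"]:
    print(visitor, assign_variant(visitor))
```

Hashing the ID, instead of picking at random on every page view, keeps returning visitors in the same variant, so each person sees one consistent version while both versions run side by side.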

The other aspect of timing is giving the test enough time to run. Remember, optimization takes time, and so does reaching statistical significance.
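A rough way to estimate how long "enough time" is: divide the total sample you need by your daily traffic. This sketch assumes all daily visitors enter the test and are split evenly across variants; the function name is hypothetical, and rounding up to whole weeks, so that each day of the week is covered equally, is a common rule of thumb rather than a requirement.

```python
from math import ceil


def estimated_test_days(visitors_per_variant, daily_visitors, variants=2, full_weeks=True):
    """Estimate how many days a test needs to reach its planned sample size."""
    if daily_visitors <= 0:
        raise ValueError("daily_visitors must be positive")
    days = ceil(visitors_per_variant * variants / daily_visitors)
    if full_weeks:
        # Round up to whole weeks so every day of the week appears equally often.
        days = ceil(days / 7) * 7
    return days


print(estimated_test_days(14749, 1500))                    # rounded to full weeks
print(estimated_test_days(14749, 1500, full_weeks=False))  # raw estimate
```

For example, 14,749 visitors per variant with 1,500 visitors a day works out to 20 days, rounded up to 21.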

Launching an A/B test? Don’t forget to check out our landing page checklist before you start.

Click here to get more tips for launching a conversion optimization test.
